I. Fine-tuning in PyTorch: training on your own images

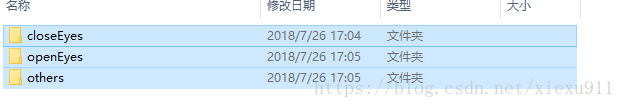

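This way of loading images uses ImageFolder, which ships with torchvision. The images must sit under a single root folder, sorted into one subfolder per class.

In my case there are three classes to distinguish.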

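```python
import torchvision
from torchvision import transforms
from torch.utils.data import DataLoader

# prepare data set
# train data
train_data = torchvision.datasets.ImageFolder(
    'F:/eyeDataSet/trainData',
    transform=transforms.Compose([
        transforms.Resize(256),       # replaces the deprecated/removed transforms.Scale
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        # ImageNet mean/std expected by the pretrained torchvision weights
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ]))
print(len(train_data))
train_loader = DataLoader(train_data, batch_size=20, shuffle=True)
```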

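Next, fine-tune the network. In PyTorch you can modify the network and then train all of its parameters, or train only some of them; here I train only the last fully connected layer.

torchvision provides many common models, such as ResNet, VGG and AlexNet.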

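```python
import torch
import torchvision

# prepare model
# torchvision >= 0.13; older releases use resnet18(pretrained=True)
mode1_ft_res18 = torchvision.models.resnet18(weights=torchvision.models.ResNet18_Weights.DEFAULT)
# freeze all pretrained parameters
for param in mode1_ft_res18.parameters():
    param.requires_grad = False
# replace the classifier with a 3-class layer; the new layer is trainable by default
num_fc = mode1_ft_res18.fc.in_features
mode1_ft_res18.fc = torch.nn.Linear(num_fc, 3)
```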

Define the optimizer; note that only the parameters of the last layer are passed to it.

```python
# loss function and optimizer
criterion = torch.nn.CrossEntropyLoss()
# parameters: only train the last fc layer
optimizer = torch.optim.Adam(mode1_ft_res18.fc.parameters(), lr=0.001)
```

Then define the remaining settings and start training.

```python
# start training
# labels are class indices, not one-hot encoded
EPOCH = 1
for epoch in range(EPOCH):
    mode1_ft_res18.train()
    train_loss = 0.
    train_acc = 0
    seen = 0  # samples processed so far (the last batch may be smaller than 20)
    for step, (batch_x, batch_y) in enumerate(train_loader):
        # tensors are fed directly; Variable wrapping is unnecessary since PyTorch 0.4
        # out has shape batch_size x num_classes and holds raw scores (logits),
        # e.g. one sample [-1.1009 0.1411 0.0320]; the prediction is the index of the max
        out = mode1_ft_res18(batch_x)
        loss = criterion(out, batch_y)  # batch_y: integer labels, not one-hot
        # loss is the batch mean, so weight it by the batch size
        # (loss.item() replaces loss.data[0], which fails on 0-dim tensors)
        train_loss += loss.item() * batch_x.size(0)
        # pred is the predicted class, batch_y the true label
        pred = torch.max(out, 1)[1]
        train_acc += (pred == batch_y).sum().item()
        seen += batch_x.size(0)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if step % 14 == 0:
            print('Epoch:', epoch, 'Step', step,
                  'Train_loss:', train_loss / seen, 'Train acc:', train_acc / seen)
```
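ResNet's `fc` layer outputs raw scores (logits) rather than probabilities, and `CrossEntropyLoss` applies log-softmax internally and expects integer class labels. A small check using the example scores `[-1.1009, 0.1411, 0.0320]` from the training-loop comments:

```python
import torch

out = torch.tensor([[-1.1009, 0.1411, 0.0320]])   # one sample, three classes (logits)
pred = torch.max(out, 1)[1]                        # torch.max over dim 1 returns (values, indices)
print(pred)                                        # tensor([1])

probs = torch.softmax(out, dim=1)                  # actual probabilities
print(probs.sum().item())                          # ~1.0
print(torch.equal(probs.argmax(dim=1), pred))      # True: softmax keeps the ordering

label = torch.tensor([1])                          # class index, not one-hot
loss = torch.nn.CrossEntropyLoss()(out, label)
manual = -torch.log_softmax(out, dim=1)[0, 1]      # negative log-likelihood of the true class
print(torch.allclose(loss, manual))                # True
```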

Testing works much like training, so I won't walk through it step by step.
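A minimal sketch of the test step as a reusable function (the name `evaluate` is hypothetical). It switches the model to `eval()`, disables gradient tracking, never touches the optimizer, and weights each batch's mean loss by the batch size so the final average is per sample:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

def evaluate(model, loader, criterion):
    """Hypothetical helper: per-sample average loss and accuracy over a loader."""
    model.eval()                          # BatchNorm/Dropout switch to inference behaviour
    total_loss, correct, n = 0.0, 0, 0
    with torch.no_grad():                 # no autograd graph; nothing is trained here
        for x, y in loader:
            out = model(x)
            total_loss += criterion(out, y).item() * x.size(0)   # undo the batch mean
            correct += (out.argmax(dim=1) == y).sum().item()
            n += x.size(0)
    return total_loss / n, correct / n

# Usage with a toy "model" whose logits already point at the right class
y = torch.arange(50) % 3
x = 10.0 * torch.nn.functional.one_hot(y, num_classes=3).float()
loader = DataLoader(TensorDataset(x, y), batch_size=20)   # batches of 20, 20, 10
test_loss, test_acc = evaluate(torch.nn.Identity(), loader, torch.nn.CrossEntropyLoss())
print(test_acc)    # 1.0
```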

That completes training and testing the network on your own data. The full code:

```python
import torch
import torchvision
from torchvision import transforms
from torch.utils.data import DataLoader

# ImageNet statistics expected by the pretrained torchvision weights
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
preprocess = transforms.Compose([
    transforms.Resize(256),      # replaces the deprecated/removed transforms.Scale
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    normalize,
])

# prepare data set
# train data
train_data = torchvision.datasets.ImageFolder('F:/eyeDataSet/trainData', transform=preprocess)
print(len(train_data))
train_loader = DataLoader(train_data, batch_size=20, shuffle=True)

# test data (no need to shuffle for evaluation)
test_data = torchvision.datasets.ImageFolder('F:/eyeDataSet/testData', transform=preprocess)
test_loader = DataLoader(test_data, batch_size=20, shuffle=False)

# prepare model
# torchvision >= 0.13; older releases use resnet18(pretrained=True)
mode1_ft_res18 = torchvision.models.resnet18(weights=torchvision.models.ResNet18_Weights.DEFAULT)
for param in mode1_ft_res18.parameters():
    param.requires_grad = False
num_fc = mode1_ft_res18.fc.in_features
mode1_ft_res18.fc = torch.nn.Linear(num_fc, 3)   # new 3-class layer, trainable by default

# loss function and optimizer
criterion = torch.nn.CrossEntropyLoss()
# parameters: only train the last fc layer
optimizer = torch.optim.Adam(mode1_ft_res18.fc.parameters(), lr=0.001)

# start training
# labels are class indices, not one-hot encoded
EPOCH = 1
for epoch in range(EPOCH):
    mode1_ft_res18.train()
    train_loss, train_acc, seen = 0., 0, 0
    for step, (batch_x, batch_y) in enumerate(train_loader):
        out = mode1_ft_res18(batch_x)                 # logits, shape batch_size x 3
        loss = criterion(out, batch_y)
        train_loss += loss.item() * batch_x.size(0)  # loss is a batch mean
        pred = torch.max(out, 1)[1]                   # predicted class index
        train_acc += (pred == batch_y).sum().item()
        seen += batch_x.size(0)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if step % 14 == 0:
            print('Epoch:', epoch, 'Step', step,
                  'Train_loss:', train_loss / seen, 'Train acc:', train_acc / seen)
    # print('Epoch:', epoch, 'Train_loss:', train_loss / len(train_data), 'Train acc:', train_acc / len(train_data))

# test model: evaluation only, so no zero_grad/backward/step on the test data
mode1_ft_res18.eval()
eval_loss, eval_acc = 0., 0
with torch.no_grad():
    for batch_x, batch_y in test_loader:
        out = mode1_ft_res18(batch_x)
        loss = criterion(out, batch_y)
        eval_loss += loss.item() * batch_x.size(0)
        # pred is the predicted class, batch_y the true label
        pred = torch.max(out, 1)[1]
        eval_acc += (pred == batch_y).sum().item()
print('Test_loss:', eval_loss / len(test_data), 'Test acc:', eval_acc / len(test_data))
```

II. Fine-tuning with pretrained models in PyTorch

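In deep learning, many sub-domain applications, such as animal recognition or food recognition, only have public datasets that are far smaller than ImageNet and similar databases. Training a deep network from scratch on them tends to overfit. The usual remedy is to take a model already trained on a large-scale dataset and fine-tune it directly on the target dataset. For the target dataset, the pretrained model is essentially just a relatively good parameter initialization; especially when the large dataset is structurally similar to the target dataset, fine-tuning on the target data can give good results.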

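Steps for fine-tuning a pretrained network:

1. First change the number of outputs of the pretrained model's final fully connected (classification) layer. The number of classes in the target dataset usually differs from that of the large-scale dataset, so set it to the number of classes in the target training set; if the two match, no change is needed.

2. Freeze the parameters of all layers before the classifier, so they take no part in learning and receive no backpropagation, and train only the classification layer. The learning rate can be set somewhat larger here, for example 10 times or a few times the original initial learning rate, or 0.01. Training is relatively fast at this stage because no layer other than the classifier needs backpropagation; it is worth trying several learning rates.

3. Then set a relatively small learning rate and train the whole network; training becomes slower at this stage.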

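The following explains in detail how, when fine-tuning a pretrained network with PyTorch, to freeze learnable parameters and to set different learning rates for different layers.

1. Freezing the learnable parameters of certain layers in PyTorch: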

```python
import torch.nn as nn


class Net(nn.Module):
    def __init__(self, num_classes=546):
        super(Net, self).__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 64, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
        )
        self.Conv1_1 = nn.Sequential(
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
        )
        # freezes every parameter registered above this point (features, Conv1_1)
        for p in self.parameters():
            p.requires_grad = False
        self.Conv1_2 = nn.Sequential(
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
        )
        # remaining layers and forward() are not shown
```

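With the code above, the parameters of self.features and self.Conv1_1 in Net are frozen (not learnable). What decides this is where the following loop is placed:

```python
for p in self.parameters():
    p.requires_grad = False
```

Every layer defined before this loop has frozen parameters, so no gradients are computed for them. You can also freeze one specific layer, for example:

```python
for p in self.features.parameters():
    p.requires_grad = False
```

This freezes all parameters of self.features.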
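The placement rule follows from `self.parameters()` only returning the submodules registered so far. A scaled-down sketch (the class `TinyNet` and its layer sizes are hypothetical) that mirrors the structure of `Net`, followed by freezing a single named submodule:

```python
import torch.nn as nn

class TinyNet(nn.Module):
    """Hypothetical miniature of Net: three blocks, freezing loop after the second."""
    def __init__(self):
        super().__init__()
        self.features = nn.Conv2d(1, 8, kernel_size=3, padding=1)
        self.Conv1_1 = nn.Conv2d(8, 8, kernel_size=3, padding=1)
        for p in self.parameters():      # sees only features and Conv1_1 at this point
            p.requires_grad = False
        self.Conv1_2 = nn.Conv2d(8, 8, kernel_size=3, padding=1)  # registered later: trainable

net = TinyNet()
status = {name: p.requires_grad for name, p in net.named_parameters()}
print(status)
# {'features.weight': False, 'features.bias': False, 'Conv1_1.weight': False,
#  'Conv1_1.bias': False, 'Conv1_2.weight': True, 'Conv1_2.bias': True}

# Freezing one named submodule instead:
for p in net.Conv1_2.parameters():
    p.requires_grad = False
all_frozen = not any(p.requires_grad for p in net.parameters())
print(all_frozen)   # True
```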

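Note: when building the optimizer, pass it only the parameters that still require gradients, as below. Early PyTorch releases raised an error if an optimizer received a parameter with requires_grad=False; current releases accept such parameters but skip them because their gradient stays None, so the filter mainly keeps the optimizer's parameter list limited to what is actually trained.

```python
# args holds command-line options (lr, momentum, weight_decay) parsed elsewhere
optimizer = torch.optim.SGD(filter(lambda p: p.requires_grad, model.parameters()), args.lr,
                            momentum=args.momentum,
                            weight_decay=args.weight_decay)
```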
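A small sketch of the filter in action, with a hypothetical two-layer model and example hyperparameters in place of `args`. Only the tensors that still require gradients end up in the optimizer, and the frozen layer is untouched by a step:

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(4, 4), nn.Linear(4, 2))   # stand-in model
for p in model[0].parameters():
    p.requires_grad = False                                 # freeze the first layer

optimizer = torch.optim.SGD(filter(lambda p: p.requires_grad, model.parameters()),
                            lr=0.1, momentum=0.9, weight_decay=1e-4)
n_params = len(optimizer.param_groups[0]["params"])
print(n_params)                                             # 2: weight and bias of model[1]

frozen_before = model[0].weight.clone()
loss = model(torch.randn(8, 4)).pow(2).mean()
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(torch.equal(model[0].weight, frozen_before))          # True
```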

2. Setting different learning rates for different layers in PyTorch

```python
model = Net()
# ids of the Conv1_2 parameters, used to exclude them from the base group
conv1_2_params = list(map(id, model.Conv1_2.parameters()))
base_params = filter(lambda p: id(p) not in conv1_2_params,
                     model.parameters())
optimizer = torch.optim.SGD([
    {'params': base_params},
    {'params': model.Conv1_2.parameters(), 'lr': 10 * args.lr}], args.lr,
    momentum=args.momentum, weight_decay=args.weight_decay)
```

The code above sets the learning rate of self.Conv1_2 in Net to 10 times the given learning rate. base_params has no learning rate of its own, so it defaults to the passed-in args.lr.

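Note that

[{'params': base_params}, {'params': model.Conv1_2.parameters(), 'lr': 10 * args.lr}]

is a list of dictionaries: each dictionary defines one parameter group, and any option a group omits (such as lr) falls back to the optimizer's default arguments.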
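A self-contained version of the same pattern, assuming a hypothetical model whose `head` submodule plays the role of `Conv1_2` and a base learning rate of 0.01 in place of `args.lr`. Reading back `optimizer.param_groups` shows that the group without an `lr` key inherited the default:

```python
import torch
import torch.nn as nn

class TwoPartNet(nn.Module):
    """Hypothetical stand-in: 'head' plays the role of Conv1_2."""
    def __init__(self):
        super().__init__()
        self.backbone = nn.Linear(8, 8)
        self.head = nn.Linear(8, 3)

model = TwoPartNet()
base_lr = 0.01                                            # plays the role of args.lr
head_ids = {id(p) for p in model.head.parameters()}
base_params = [p for p in model.parameters() if id(p) not in head_ids]

optimizer = torch.optim.SGD(
    [{"params": base_params},                             # no 'lr' key: inherits base_lr
     {"params": model.head.parameters(), "lr": 10 * base_lr}],
    lr=base_lr, momentum=0.9)

group_lrs = [g["lr"] for g in optimizer.param_groups]
group_sizes = [len(g["params"]) for g in optimizer.param_groups]
print(group_lrs, group_sizes)   # learning rates of about 0.01 and 0.1; 2 tensors per group
```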

Setting learning rates this way is not very flexible. For more flexible per-layer learning rates, they can be set inside an adjust_learning_rate function as follows:

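```python
def adjust_learning_rate(optimizer, epoch, args):
    # step schedule: 0.01 for epochs 0-9, 0.005 for 10-19, 0.0025 for 20-29
    lre = []
    lre.extend([0.01] * 10)
    lre.extend([0.005] * 10)
    lre.extend([0.0025] * 10)
    lr = lre[epoch]                                # only defined for epochs 0-29
    optimizer.param_groups[0]['lr'] = 0.9 * lr     # base_params group
    optimizer.param_groups[1]['lr'] = 10 * lr      # Conv1_2 group
    print(optimizer.param_groups[0]['lr'])
    print(optimizer.param_groups[1]['lr'])
```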

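In the code above, optimizer.param_groups[0] is the group {'params': base_params} from [{'params': base_params}, {'params': model.Conv1_2.parameters(), 'lr': 10 * args.lr}], and optimizer.param_groups[1] is {'params': model.Conv1_2.parameters(), 'lr': 10 * args.lr}. The learning rates assigned here override args.lr. In my view this makes learning-rate settings more flexible. The same logic can also be written as follows (the learning rates here are arbitrary and do not match the code above):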

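```python
import numpy as np


def adjust_learning_rate(optimizer, epoch, args):
    # 40 learning rates spaced evenly on a log scale from 1e-2 down to 1e-4
    lre = np.logspace(-2, -4, 40)
    lr = float(lre[epoch])
    for i in range(len(optimizer.param_groups)):
        param_group = optimizer.param_groups[i]
        if i == 0:
            param_group['lr'] = 0.9 * lr    # base group
        else:
            param_group['lr'] = 10 * lr     # every other group
        print(param_group['lr'])
```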
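As an alternative to editing `param_groups` by hand, PyTorch's built-in `torch.optim.lr_scheduler.LambdaLR` accepts one multiplier function per parameter group and sets each group's rate to its initial rate times that multiplier. This is a swapped-in technique, not the author's code; the stand-in model, the starting rates (0.9 × 0.01 and 10 × 0.01, echoing the first adjust_learning_rate) and the halve-every-10-epochs rule are illustrative assumptions.

```python
import torch
import torch.nn as nn
from torch.optim.lr_scheduler import LambdaLR

model = nn.Sequential(nn.Linear(8, 8), nn.Linear(8, 3))   # stand-in: [0] = base, [1] = upper layer
optimizer = torch.optim.SGD(
    [{"params": model[0].parameters(), "lr": 0.009},      # 0.9 * 0.01
     {"params": model[1].parameters(), "lr": 0.1}],       # 10 * 0.01
    lr=0.01, momentum=0.9)

def halve_every_10(epoch):
    # 1.0 for epochs 0-9, 0.5 for 10-19, 0.25 for 20-29, ... (like the lre list)
    return 0.5 ** (epoch // 10)

scheduler = LambdaLR(optimizer, lr_lambda=[halve_every_10, halve_every_10])  # one per group

history = []
for epoch in range(35):
    # ... a real epoch of training (forward, backward, optimizer.step()) goes here ...
    optimizer.step()                  # no gradients yet, so parameters are left unchanged
    history.append([g["lr"] for g in optimizer.param_groups])
    scheduler.step()                  # move to the next epoch's learning rates

print(history[0], history[10], history[34])
```

Unlike the hand-written `lre` list, the multiplier keeps working past epoch 29 instead of raising an IndexError.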

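Below is the PyTorch implementation of the SGD optimizer, with the meaning of each parameter. It is taken from an older PyTorch release; the current torch/optim/sgd.py is organized differently.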

```python
import torch
from .optimizer import Optimizer, required


class SGD(Optimizer):
    r"""Implements stochastic gradient descent (optionally with momentum).

    Nesterov momentum is based on the formula from
    `On the importance of initialization and momentum in deep learning`__.

    Args:
        params (iterable): iterable of parameters to optimize or dicts defining
            parameter groups
        lr (float): learning rate
        momentum (float, optional): momentum factor (default: 0)
        weight_decay (float, optional): weight decay (L2 penalty) (default: 0)
        dampening (float, optional): dampening for momentum (default: 0)
        nesterov (bool, optional): enables Nesterov momentum (default: False)

    Example:
        >>> optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)
        >>> optimizer.zero_grad()
        >>> loss_fn(model(input), target).backward()
        >>> optimizer.step()

    __ http://www.cs.toronto.edu/%7Ehinton/absps/momentum.pdf

    .. note::
        The implementation of SGD with Momentum/Nesterov subtly differs from
        Sutskever et. al. and implementations in some other frameworks.

        Considering the specific case of Momentum, the update can be written as

        .. math::
                  v = \rho * v + g \\
                  p = p - lr * v

        where p, g, v and :math:`\rho` denote the parameters, gradient,
        velocity, and momentum respectively.

        This is in contrast to Sutskever et. al. and
        other frameworks which employ an update of the form

        .. math::
             v = \rho * v + lr * g \\
             p = p - v

        The Nesterov version is analogously modified.
    """

    def __init__(self, params, lr=required, momentum=0, dampening=0,
                 weight_decay=0, nesterov=False):
        if lr is not required and lr < 0.0:
            raise ValueError("Invalid learning rate: {}".format(lr))
        if momentum < 0.0:
            raise ValueError("Invalid momentum value: {}".format(momentum))
        if weight_decay < 0.0:
            raise ValueError("Invalid weight_decay value: {}".format(weight_decay))

        defaults = dict(lr=lr, momentum=momentum, dampening=dampening,
                        weight_decay=weight_decay, nesterov=nesterov)
        if nesterov and (momentum <= 0 or dampening != 0):
            raise ValueError("Nesterov momentum requires a momentum and zero dampening")
        super(SGD, self).__init__(params, defaults)

    def __setstate__(self, state):
        super(SGD, self).__setstate__(state)
        for group in self.param_groups:
            group.setdefault('nesterov', False)

    def step(self, closure=None):
        """Performs a single optimization step.

        Arguments:
            closure (callable, optional): A closure that reevaluates the model
                and returns the loss.
        """
        loss = None
        if closure is not None:
            loss = closure()

        for group in self.param_groups:
            weight_decay = group['weight_decay']
            momentum = group['momentum']
            dampening = group['dampening']
            nesterov = group['nesterov']

            for p in group['params']:
                if p.grad is None:
                    continue
                d_p = p.grad.data
                if weight_decay != 0:
                    d_p.add_(weight_decay, p.data)
                if momentum != 0:
                    param_state = self.state[p]
                    if 'momentum_buffer' not in param_state:
                        buf = param_state['momentum_buffer'] = torch.zeros_like(p.data)
                        buf.mul_(momentum).add_(d_p)
                    else:
                        buf = param_state['momentum_buffer']
                        buf.mul_(momentum).add_(1 - dampening, d_p)
                    if nesterov:
                        d_p = d_p.add(momentum, buf)
                    else:
                        d_p = buf

                p.data.add_(-group['lr'], d_p)

        return loss
```
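To see that the quoted momentum rule (v = ρ·v + g, then p = p − lr·v, with weight decay folded into g) matches what the optimizer does, this sketch replays it by hand next to `torch.optim.SGD` on a toy quadratic loss. The loss, starting values and hyperparameters are arbitrary assumptions; dampening is 0 and Nesterov is off.

```python
import torch

p = torch.nn.Parameter(torch.tensor([1.0, -2.0]))
lr, rho, wd = 0.1, 0.9, 0.01                       # example hyperparameters
opt = torch.optim.SGD([p], lr=lr, momentum=rho, weight_decay=wd)

p_manual = p.detach().clone()
v = None
for _ in range(3):
    # library update
    opt.zero_grad()
    loss = (p ** 2).sum()                          # toy loss: gradient is 2 * p
    loss.backward()
    opt.step()

    # hand-written update from the docstring formula
    g = 2 * p_manual + wd * p_manual               # gradient plus L2 (weight decay) term
    v = g.clone() if v is None else rho * v + g    # first step initialises the buffer to g
    p_manual = p_manual - lr * v

print(torch.allclose(p.detach(), p_manual))        # True
```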

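Lessons learned:

When fine-tuning, it is best not to alternate learnable and frozen layers; this usually does not work well. Following the general recipe is enough: first fine-tune the classification layer with a relatively large learning rate, then set a smaller learning rate for the whole network and train all layers together.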

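Alternatively, you can skip fine-tuning the classifier first and train all layers together from the start, giving only the classifier a relatively larger learning rate. That also works; I have not evaluated which approach is better. When fine-tuning a network trained with softmax loss using a triplet loss, a stepped, relatively small learning rate with all layers trained together works well, without first fine-tuning on the output of the layer before the classifier.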

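The above is my personal experience; I hope it serves as a useful reference, and I hope you will continue to support 脚本之家 (Script Home).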
