Scraping All of a Celebrity's Photos from Douban with Scrapy

This walkthrough uses Monica Bellucci as the example.

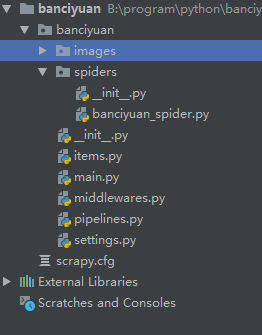

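1. First, in a terminal, change into the directory where the project should live and run scrapy startproject banciyuan to create the Scrapy project.

The generated project follows the standard Scrapy layout: a scrapy.cfg file next to a banciyuan package that contains items.py, middlewares.py, pipelines.py, settings.py, and a spiders directory.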

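2. To make the Scrapy project easy to run from PyCharm, create a new file named main.py:

    from scrapy import cmdline

    # Same as typing "scrapy crawl banciyuan" in a terminal.
    cmdline.execute("scrapy crawl banciyuan".split())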
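`cmdline.execute` takes an argument list shaped like `sys.argv`, which is why the command string is split on whitespace. As a small sketch, the list can also be assembled explicitly, which makes it easier to append options such as Scrapy's `-o` feed-export flag. The helper name `build_crawl_argv` and its `output` parameter are hypothetical and exist only for illustration.

```python
def build_crawl_argv(spider_name, output=None):
    """Build the argv list for `scrapy crawl <spider_name>`.

    `output` is an optional feed file passed through Scrapy's `-o` option.
    This helper is hypothetical; it only assembles the list.
    """
    argv = ["scrapy", "crawl", spider_name]
    if output:
        argv += ["-o", output]
    return argv


if __name__ == "__main__":
    # Equivalent to "scrapy crawl banciyuan".split()
    print(build_crawl_argv("banciyuan"))
    print(build_crawl_argv("banciyuan", output="items.json"))
```

In main.py, the returned list would be passed to `cmdline.execute(...)` in place of the split string.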

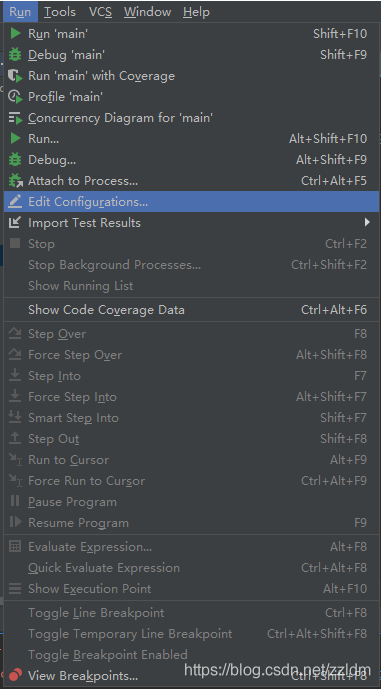

Next, open Edit Configurations in PyCharm:

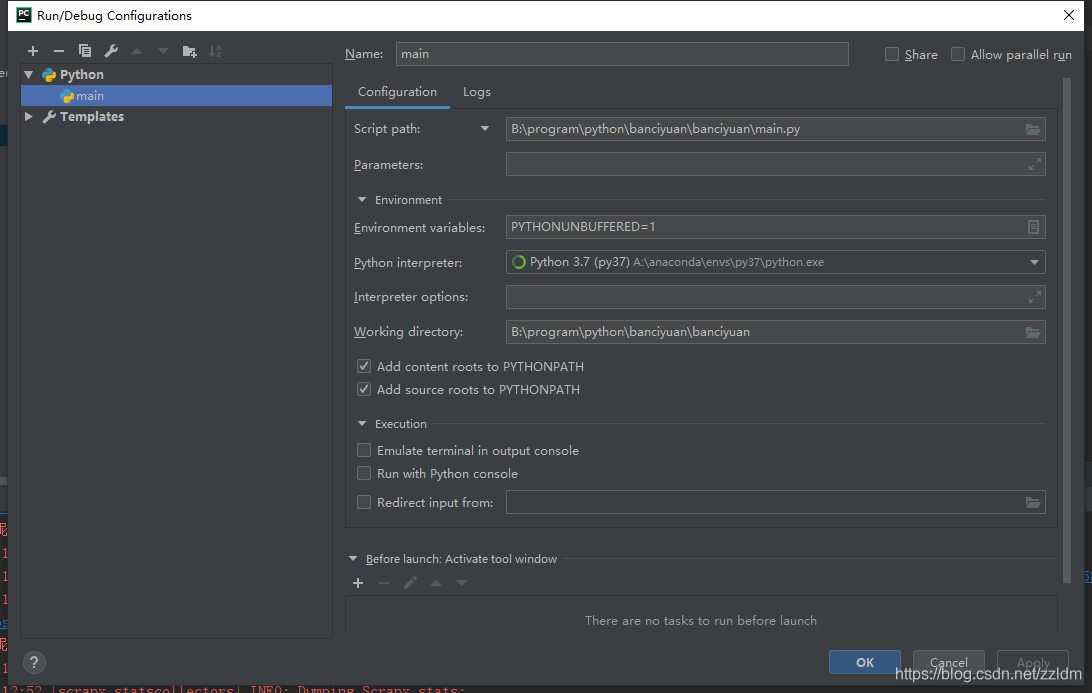

Then fill in the settings as shown below. Once this is done, running main.py runs the Scrapy project.

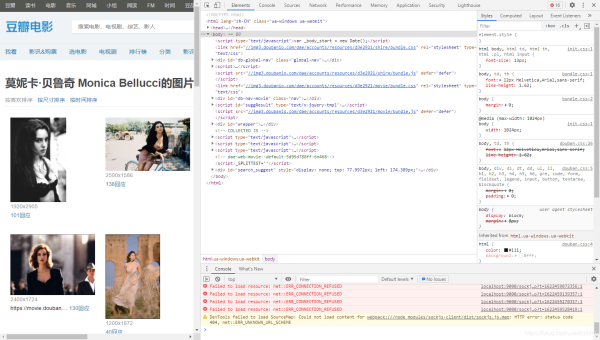

3. Analyze the HTML of the photo pages and create the corresponding spider
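Before running anything against the live site, it can help to try the selectors on a tiny sample. The sketch below is only an approximation: the sample markup is hypothetical, inferred from the XPath expressions used in the spider rather than captured from Douban, and the URLs are placeholders. It also swaps Scrapy's selectors for the standard library's `xml.etree.ElementTree`, which supports only a subset of XPath, so `/text()` and `/@href` are replaced by `.text` and `.get("href")`.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified listing page. The structure is inferred
# from the spider's XPath expressions; the URLs are placeholders.
SAMPLE_PAGE = """
<html><body>
<div class="article">
  <ul>
    <li><div class="cover"><a href="https://example.com/photo/1/"><img src="a.jpg"/></a></div></li>
    <li><div class="cover"><a href="https://example.com/photo/2/"><img src="b.jpg"/></a></div></li>
  </ul>
  <div class="paginator">
    <a href="?start=0">1</a>
    <a href="?start=30">2</a>
    <a href="?start=60">3</a>
  </div>
</div>
</body></html>
"""


def last_page_number(root):
    """Return the text of the last <a> directly inside the paginator, as an int."""
    link = root.find('.//div[@class="paginator"]/a[last()]')
    return int(link.text) if link is not None else 1


def photo_links(root):
    """Return the href of every link nested in a div.cover inside div.article."""
    anchors = root.findall('.//div[@class="article"]//div[@class="cover"]/a')
    return [a.get("href") for a in anchors]


if __name__ == "__main__":
    root = ET.fromstring(SAMPLE_PAGE)
    print(last_page_number(root))
    print(photo_links(root))
```

The spider that follows applies the same two ideas to real responses: read the page count from the paginator, then follow each cover link to its detail page.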

    import scrapy
    from scrapy import Spider

    from banciyuan.items import BanciyuanItem


    class BanciyuanSpider(Spider):
        name = 'banciyuan'
        allowed_domains = ['movie.douban.com']
        start_urls = ["https://movie.douban.com/celebrity/1025156/photos/"]
        url = "https://movie.douban.com/celebrity/1025156/photos/"

        def parse(self, response):
            # The last link in the paginator holds the total number of pages.
            num = response.xpath('//div[@class="paginator"]/a[last()]/text()').extract_first('')
            print(num)
            # Each listing page shows 30 photos, so page i starts at offset i * 30.
            # Fall back to a single page when no paginator is found.
            for i in range(int(num or 1)):
                suffix = '?type=C&start=' + str(i * 30) + '&sortby=like&size=a&subtype=a'
                yield scrapy.Request(url=self.url + suffix, callback=self.get_page)

        def get_page(self, response):
            # Collect the link to every photo detail page on this listing page.
            href_list = response.xpath('//div[@class="article"]//div[@class="cover"]/a/@href').extract()
            # print(href_list)
            for href in href_list:
                yield scrapy.Request(url=href, callback=self.get_info)

        def get_info(self, response):
            # The detail page carries the full-size image URL and the page title.
            src = response.xpath(
                '//div[@class="article"]//div[@class="photo-show"]//div[@class="photo-wp"]/a[1]/img/@src').extract_first('')
            title = response.xpath('//div[@id="content"]/h1/text()').extract_first('')
            # print(response.body)
            item = BanciyuanItem()
            item['title'] = title
            item['src'] = [src]
            yield item
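The pagination suffix built in `parse` is an ordinary URL query string whose `start` value advances in steps of 30. A minimal sketch of the same construction using the standard library's `urlencode` is shown here; the function name `listing_urls` and the constant names are hypothetical, and the parameter values are the ones that appear in the spider.

```python
from urllib.parse import urlencode

BASE_URL = "https://movie.douban.com/celebrity/1025156/photos/"
PAGE_SIZE = 30  # photos per listing page, as implied by `i * 30` in the spider


def listing_urls(page_count, base_url=BASE_URL, page_size=PAGE_SIZE):
    """Return one listing URL per page, with `start` advancing by page_size."""
    urls = []
    for page in range(page_count):
        query = urlencode({
            "type": "C",
            "start": page * page_size,
            "sortby": "like",
            "size": "a",
            "subtype": "a",
        })
        urls.append(f"{base_url}?{query}")
    return urls


if __name__ == "__main__":
    for url in listing_urls(3):
        print(url)
```

Letting `urlencode` join the pairs avoids hand-typed separators, which are easy to lose when a URL is copied around.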

4. items.py

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/items.html

    import scrapy


    class BanciyuanItem(scrapy.Item):
        # define the fields for your item here like:
        src = scrapy.Field()
        title = scrapy.Field()

pipelines.py

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter
    from scrapy.pipelines.images import ImagesPipeline
    import scrapy


    class BanciyuanPipeline(ImagesPipeline):

        def get_media_requests(self, item, info):
            # Request the image and carry the item along so file_path can name the file.
            yield scrapy.Request(url=item['src'][0], meta={'item': item})

        def file_path(self, request, response=None, info=None, *, item=None):
            item = request.meta['item']
            # Use the last segment of the image URL as the file name.
            image_name = item['src'][0].split('/')[-1]
            # image_name.replace('.webp', '.jpg')
            # Store images in a folder named after the first word of the title.
            path = '%s/%s' % (item['title'].split(' ')[0], image_name)
            return path
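The naming logic in `file_path` can be tried on its own, without Scrapy. The sketch below mirrors it in a standalone function; `image_path` and its `rename_webp` switch are hypothetical names, and the title and URL in the demo are made-up placeholders. It also shows one detail about the commented-out rename: `str.replace` returns a new string, so the result has to be assigned back for the rename to take effect.

```python
def image_path(title, src, rename_webp=False):
    """Return '<first word of title>/<file name from src>'.

    Mirrors the folder/file naming used in file_path. `rename_webp` is a
    hypothetical switch that applies the .webp -> .jpg rename.
    """
    folder = title.split(' ')[0]
    image_name = src.split('/')[-1]
    if rename_webp:
        # str.replace returns a new string, so the result must be assigned.
        image_name = image_name.replace('.webp', '.jpg')
    return '%s/%s' % (folder, image_name)


if __name__ == "__main__":
    # Both values below are made-up placeholders, not real Douban data.
    title = "Monica Bellucci photos"
    src = "https://example.com/view/photo/l/public/p0001.webp"
    print(image_path(title, src))
    print(image_path(title, src, rename_webp=True))
```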

settings.py

    # Scrapy settings for banciyuan project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://docs.scrapy.org/en/latest/topics/settings.html
    #     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'banciyuan'

    SPIDER_MODULES = ['banciyuan.spiders']
    NEWSPIDER_MODULE = 'banciyuan.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    # USER_AGENT must be a plain string, not a headers dict.
    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.80 Safari/537.36'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #   'Accept-Language': 'en',
    #}

    # Enable or disable spider middlewares
    # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'banciyuan.middlewares.BanciyuanSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'banciyuan.middlewares.BanciyuanDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://docs.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'banciyuan.pipelines.BanciyuanPipeline': 1,
    }

    IMAGES_STORE = './images'

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
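Most of settings.py is the untouched project template. As a condensed sketch, these are the values the project actually relies on. The `DOWNLOAD_DELAY` line is an assumption added here for politeness toward the site and is not part of the original configuration. Note also that Scrapy's `ImagesPipeline` depends on the Pillow library being installed.

```python
# settings.py, reduced to the values this project changes from the template
BOT_NAME = 'banciyuan'
SPIDER_MODULES = ['banciyuan.spiders']
NEWSPIDER_MODULE = 'banciyuan.spiders'

# A plain string; Scrapy sends it as the User-Agent header.
USER_AGENT = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/71.0.3578.80 Safari/537.36')

ROBOTSTXT_OBEY = False

# Route items through the image pipeline and store files under ./images.
ITEM_PIPELINES = {
    'banciyuan.pipelines.BanciyuanPipeline': 1,
}
IMAGES_STORE = './images'

# Assumption, not in the original: a small delay between requests.
DOWNLOAD_DELAY = 1
```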

5. Crawl results
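After a crawl, the downloaded files should sit under `IMAGES_STORE` (`./images`), grouped into one folder per title prefix as defined in `file_path`. One quick way to check that, sketched with the standard library only, is to count the files in each folder. The helper `count_images` is hypothetical, and the demo runs against a throwaway directory with made-up file names.

```python
import os
import tempfile


def count_images(store_dir):
    """Return {subfolder: number of files} for each folder under store_dir."""
    counts = {}
    for entry in sorted(os.listdir(store_dir)):
        folder = os.path.join(store_dir, entry)
        if os.path.isdir(folder):
            counts[entry] = sum(
                1 for name in os.listdir(folder)
                if os.path.isfile(os.path.join(folder, name))
            )
    return counts


if __name__ == "__main__":
    # Demo against a throwaway directory with made-up file names.
    with tempfile.TemporaryDirectory() as store:
        os.makedirs(os.path.join(store, "Monica"))
        for name in ("p1.jpg", "p2.jpg"):
            open(os.path.join(store, "Monica", name), "w").close()
        print(count_images(store))
```

For the real project, the function would be pointed at `./images` once the crawl has finished.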

