目录

- 一、环境准备

- 二、问题分析

- 三、spider

- 四、item

- 五、setting

- 六、pipelines

- 七、middlewares

- 八、使用jupyter进行简单的处理和分析

一、环境准备

- python3.8.3

- pycharm

- 项目所需第三方包

pip install scrapy fake-useragent requests selenium virtualenv -i https://pypi.douban.com/simple

1.1 创建虚拟环境

切换到指定目录创建

创建完记得激活虚拟环境

1.2 创建项目

1.3 使用pycharm打开项目,将创建的虚拟环境配置到项目中来

1.4 创建京东spider

scrapy genspider 爬虫名称 url

1.5 修改允许访问的域名,删除https:

二、问题分析

爬取数据的思路是先获取首页的基本信息,在获取详情页商品详细信息;爬取京东数据时,只返回40条数据,这里,作者使用selenium,在scrapy框架中编写下载器中间件,返回页面所有数据。

爬取的字段分别是:

商品价格

商品评数

商品店家

商品SKU(京东可直接搜索到对应的产品)

商品标题

商品详细信息

三、spider

import re

import scrapy

from lianjia.items import jd_detailItem

class JiComputerDetailSpider(scrapy.Spider):

name = 'ji_computer_detail'

allowed_domains = ['search.jd.com', 'item.jd.com']

start_urls = [

'https://search.jd.com/Search?keyword=%E7%AC%94%E8%AE%B0%E6%9C%AC%E7%94%B5%E8%84%91suggest=1.def.0.basewq=%E7%AC%94%E8%AE%B0%E6%9C%AC%E7%94%B5%E8%84%91page=1s=1click=0']

def parse(self, response):

lls = response.xpath('//ul[@class="gl-warp clearfix"]/li')

for ll in lls:

item = jd_detailItem()

computer_price = ll.xpath('.//div[@class="p-price"]/strong/i/text()').extract_first()

computer_commit = ll.xpath('.//div[@class="p-commit"]/strong/a/text()').extract_first()

computer_p_shop = ll.xpath('.//div[@class="p-shop"]/span/a/text()').extract_first()

item['computer_price'] = computer_price

item['computer_commit'] = computer_commit

item['computer_p_shop'] = computer_p_shop

meta = {

'item': item

}

shop_detail_url = ll.xpath('.//div[@class="p-img"]/a/@href').extract_first()

shop_detail_url = 'https:' + shop_detail_url

yield scrapy.Request(url=shop_detail_url, callback=self.detail_parse, meta=meta)

for i in range(2, 200, 2):

next_page_url = f'https://search.jd.com/Search?keyword=%E7%AC%94%E8%AE%B0%E6%9C%AC%E7%94%B5%E8%84%91suggest=1.def.0.basewq=%E7%AC%94%E8%AE%B0%E6%9C%AC%E7%94%B5%E8%84%91page={i}s=116click=0'

yield scrapy.Request(url=next_page_url, callback=self.parse)

def detail_parse(self, response):

item = response.meta.get('item')

computer_sku = response.xpath('//a[@class="notice J-notify-sale"]/@data-sku').extract_first()

item['computer_sku'] = computer_sku

computer_title = response.xpath('//div[@class="sku-name"]/text()').extract_first().strip()

computer_title = ''.join(re.findall('\S', computer_title))

item['computer_title'] = computer_title

computer_detail = response.xpath('string(//ul[@class="parameter2 p-parameter-list"])').extract_first().strip()

computer_detail = ''.join(re.findall('\S', computer_detail))

item['computer_detail'] = computer_detail

yield item

四、item

class jd_detailItem(scrapy.Item):

# define the fields for your item here like:

computer_sku = scrapy.Field()

computer_price = scrapy.Field()

computer_title = scrapy.Field()

computer_commit = scrapy.Field()

computer_p_shop = scrapy.Field()

computer_detail = scrapy.Field()

五、setting

import random

from fake_useragent import UserAgent

ua = UserAgent()

USER_AGENT = ua.random

ROBOTSTXT_OBEY = False

DOWNLOAD_DELAY = random.uniform(0.5, 1)

DOWNLOADER_MIDDLEWARES = {

'lianjia.middlewares.jdDownloaderMiddleware': 543

}

ITEM_PIPELINES = {

'lianjia.pipelines.jd_csv_Pipeline': 300

}

六、pipelines

class jd_csv_Pipeline:

# def process_item(self, item, spider):

# return item

def open_spider(self, spider):

self.fp = open('./jd_computer_message.xlsx', mode='w+', encoding='utf-8')

self.fp.write('computer_sku\tcomputer_title\tcomputer_p_shop\tcomputer_price\tcomputer_commit\tcomputer_detail\n')

def process_item(self, item, spider):

# 写入文件

try:

line = '\t'.join(list(item.values())) + '\n'

self.fp.write(line)

return item

except:

pass

def close_spider(self, spider):

# 关闭文件

self.fp.close()

七、middlewares

class jdDownloaderMiddleware:

def process_request(self, request, spider):

# 判断是否是ji_computer_detail的爬虫

# 判断是否是首页

if spider.name == 'ji_computer_detail' and re.findall(f'.*(item.jd.com).*', request.url) == []:

options = ChromeOptions()

options.add_argument("--headless")

driver = webdriver.Chrome(options=options)

driver.get(request.url)

for i in range(0, 15000, 5000):

driver.execute_script(f'window.scrollTo(0, {i})')

time.sleep(0.5)

body = driver.page_source.encode()

time.sleep(1)

return HtmlResponse(url=request.url, body=body, request=request)

return None

八、使用jupyter进行简单的处理和分析

其他文件:百度停用词库、简体字文件

下载第三方包

!pip install seaborn jieba wordcloud PIL -i https://pypi.douban.com/simple

8.1导入第三方包

import re

import os

import jieba

import wordcloud

import pandas as pd

import numpy as np

from PIL import Image

import seaborn as sns

from docx import Document

from docx.shared import Inches

import matplotlib.pyplot as plt

from pandas import DataFrame,Series

8.2设置可视化的默认字体和seaborn的样式

sns.set_style('darkgrid')

plt.rcParams['font.sans-serif'] = ['SimHei']

plt.rcParams['axes.unicode_minus'] = False

8.3读取数据

df_jp = pd.read_excel('./jd_shop.xlsx')

8.4筛选Inteli5、i7、i9处理器数据

def convert_one(s):

if re.findall(f'.*?(i5).*', str(s)) != []:

return re.findall(f'.*?(i5).*', str(s))[0]

elif re.findall(f'.*?(i7).*', str(s)) != []:

return re.findall(f'.*?(i7).*', str(s))[0]

elif re.findall(f'.*?(i9).*', str(s)) != []:

return re.findall(f'.*?(i9).*', str(s))[0]

df_jp['computer_intel'] = df_jp['computer_detail'].map(convert_one)

8.5筛选笔记本电脑的屏幕尺寸范围

def convert_two(s):

if re.findall(f'.*?(\d+\.\d+英寸-\d+\.\d+英寸).*', str(s)) != []:

return re.findall(f'.*?(\d+\.\d+英寸-\d+\.\d+英寸).*', str(s))[0]

df_jp['computer_in'] = df_jp['computer_detail'].map(convert_two)

8.6将评论数转化为整形

def convert_three(s):

if re.findall(f'(\d+)万+', str(s)) != []:

number = int(re.findall(f'(\d+)万+', str(s))[0]) * 10000

return number

elif re.findall(f'(\d+)+', str(s)) != []:

number = re.findall(f'(\d+)+', str(s))[0]

return number

df_jp['computer_commit'] = df_jp['computer_commit'].map(convert_three)

8.7筛选出需要分析的品牌

def find_computer(name, s):

sr = re.findall(f'.*({name}).*', str(s))[0]

return sr

def convert(s):

if re.findall(f'.*(联想).*', str(s)) != []:

return find_computer('联想', s)

elif re.findall(f'.*(惠普).*', str(s)) != []:

return find_computer('惠普', s)

elif re.findall(f'.*(华为).*', str(s)) != []:

return find_computer('华为', s)

elif re.findall(f'.*(戴尔).*', str(s)) != []:

return find_computer('戴尔', s)

elif re.findall(f'.*(华硕).*', str(s)) != []:

return find_computer('华硕', s)

elif re.findall(f'.*(小米).*', str(s)) != []:

return find_computer('小米', s)

elif re.findall(f'.*(荣耀).*', str(s)) != []:

return find_computer('荣耀', s)

elif re.findall(f'.*(神舟).*', str(s)) != []:

return find_computer('神舟', s)

elif re.findall(f'.*(外星人).*', str(s)) != []:

return find_computer('外星人', s)

df_jp['computer_p_shop'] = df_jp['computer_p_shop'].map(convert)

8.8删除指定字段为空值的数据

for n in ['computer_price', 'computer_commit', 'computer_p_shop', 'computer_sku', 'computer_detail', 'computer_intel', 'computer_in']:

index_ls = df_jp[df_jp[[n]].isnull().any(axis=1)==True].index

df_jp.drop(index=index_ls, inplace=True)

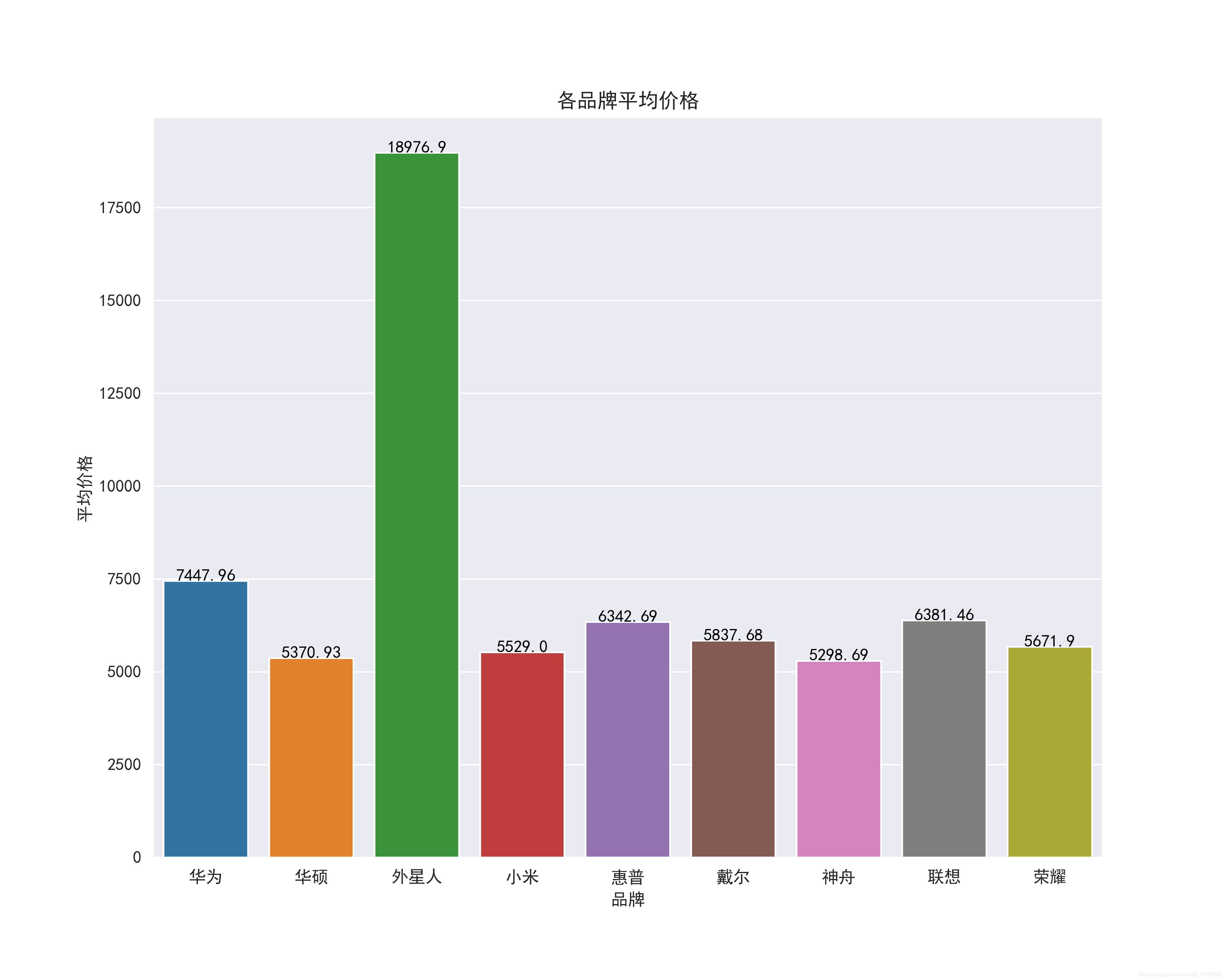

8.9查看各品牌的平均价格

plt.figure(figsize=(10, 8), dpi=100)

ax = sns.barplot(x='computer_p_shop', y='computer_price', data=df_jp.groupby(by='computer_p_shop')[['computer_price']].mean().reset_index())

for index,row in df_jp.groupby(by='computer_p_shop')[['computer_price']].mean().reset_index().iterrows():

ax.text(row.name,row['computer_price'] + 2,round(row['computer_price'],2),color="black",ha="center")

ax.set_xlabel('品牌')

ax.set_ylabel('平均价格')

ax.set_title('各品牌平均价格')

boxplot_fig = ax.get_figure()

boxplot_fig.savefig('各品牌平均价格.png', dpi=400)

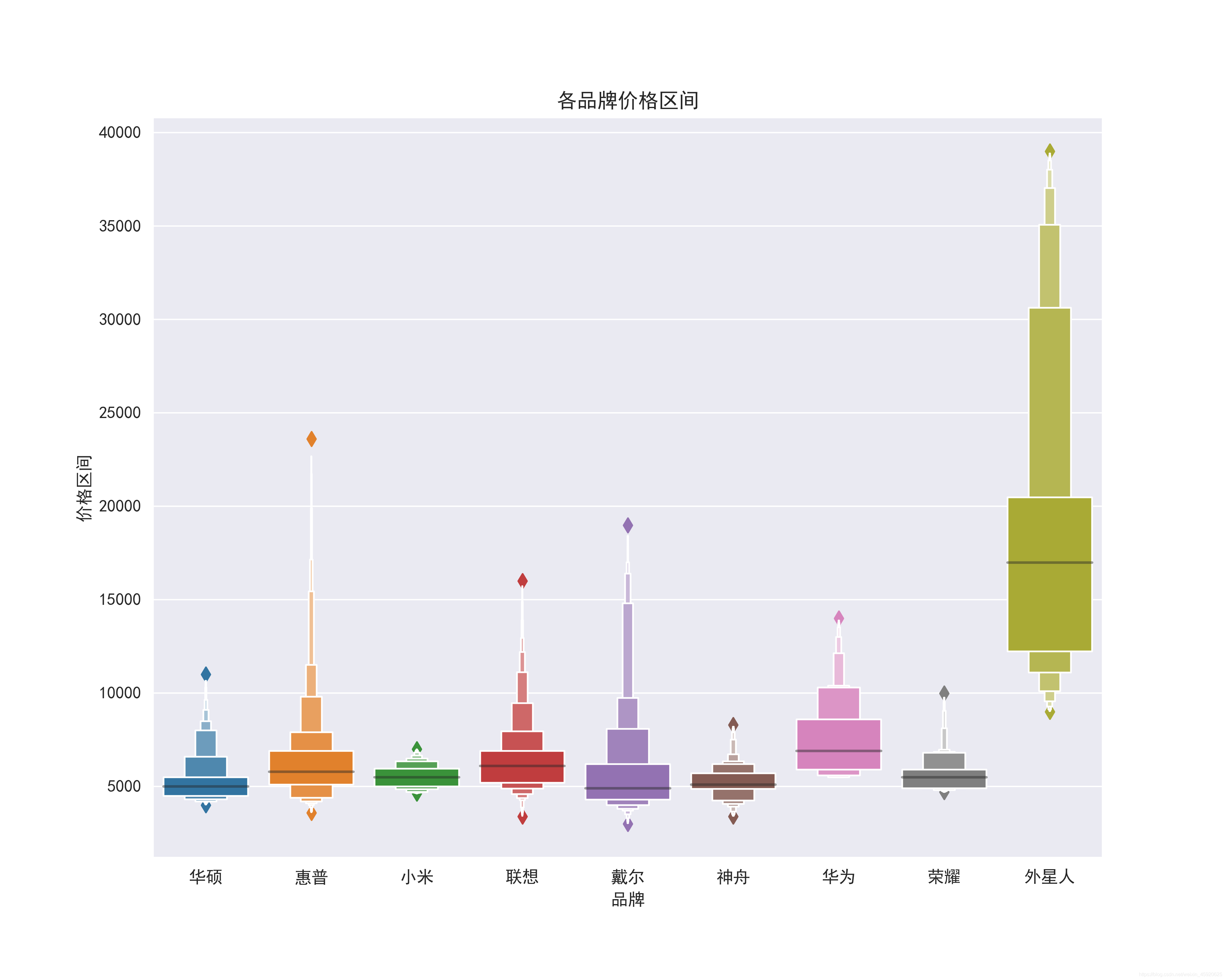

8.10 查看各品牌的价格区间

plt.figure(figsize=(10, 8), dpi=100)

ax = sns.boxenplot(x='computer_p_shop', y='computer_price', data=df_jp.query('computer_price>500'))

ax.set_xlabel('品牌')

ax.set_ylabel('价格区间')

ax.set_title('各品牌价格区间')

boxplot_fig = ax.get_figure()

boxplot_fig.savefig('各品牌价格区间.png', dpi=400)

8.11 查看价格与评论数的关系

df_jp['computer_commit'] = df_jp['computer_commit'].astype('int64')

ax = sns.jointplot(x="computer_commit", y="computer_price", data=df_jp, kind="reg", truncate=False,color="m", height=10)

ax.fig.savefig('评论数与价格的关系.png')

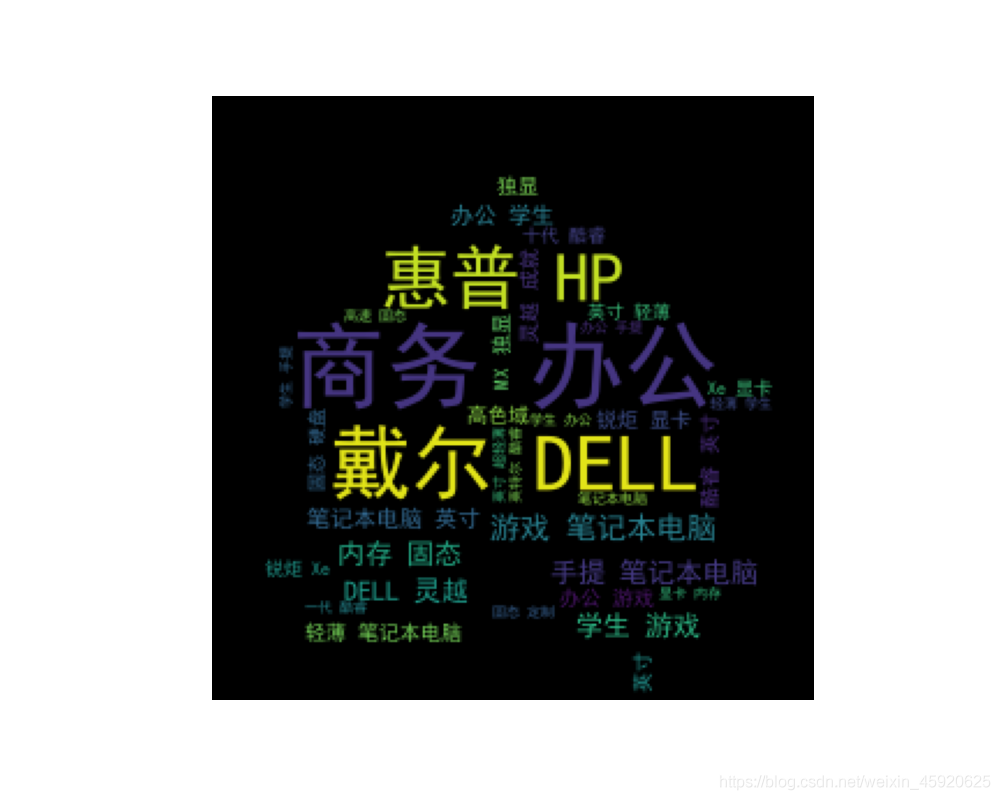

8.12 查看商品标题里出现的关键词

import imageio

# 将特征转换为列表

ls = df_jp['computer_title'].to_list()

# 替换非中英文的字符

feature_points = [re.sub(r'[^a-zA-Z\u4E00-\u9FA5]+',' ',str(feature)) for feature in ls]

# 读取停用词

stop_world = list(pd.read_csv('./百度停用词表.txt', engine='python', encoding='utf-8', names=['stopwords'])['stopwords'])

feature_points2 = []

for feature in feature_points: # 遍历每一条评论

words = jieba.lcut(feature) # 精确模式,没有冗余.对每一条评论进行jieba分词

ind1 = np.array([len(word) > 1 for word in words]) # 判断每个分词的长度是否大于1

ser1 = pd.Series(words)

ser2 = ser1[ind1] # 筛选分词长度大于1的分词留下

ind2 = ~ser2.isin(stop_world) # 注意取反负号

ser3 = ser2[ind2].unique() # 筛选出不在停用词表的分词留下,并去重

if len(ser3) > 0:

feature_points2.append(list(ser3))

# 将所有分词存储到一个列表中

wordlist = [word for feature in feature_points2 for word in feature]

# 将列表中所有的分词拼接成一个字符串

feature_str = ' '.join(wordlist)

# 标题分析

font_path = r'./simhei.ttf'

shoes_box_jpg = imageio.imread('./home.jpg')

wc=wordcloud.WordCloud(

background_color='black',

mask=shoes_box_jpg,

font_path = font_path,

min_font_size=5,

max_font_size=50,

width=260,

height=260,

)

wc.generate(feature_str)

plt.figure(figsize=(10, 8), dpi=100)

plt.imshow(wc)

plt.axis('off')

plt.savefig('标题提取关键词')

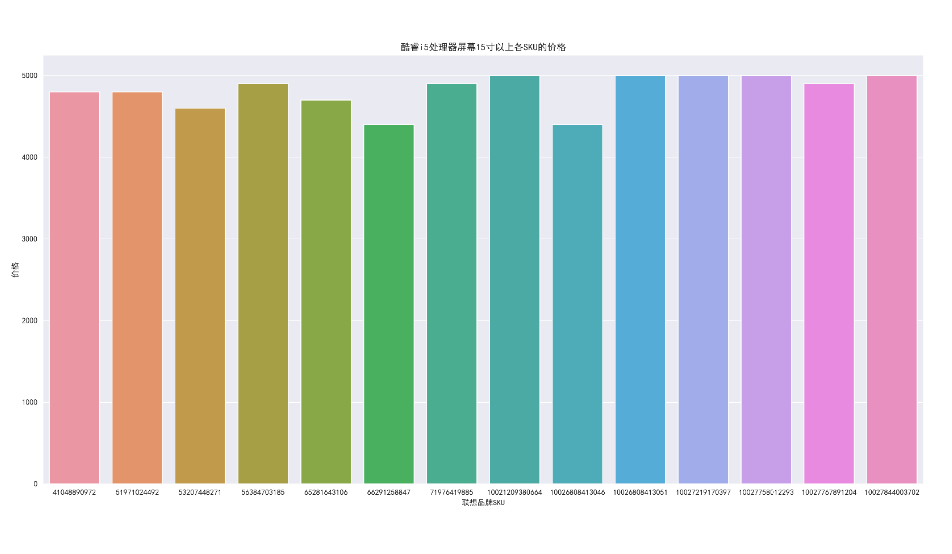

8.13 筛选价格在4000到5000,联想品牌、处理器是i5、屏幕大小在15寸以上的数据并查看价格

df_jd_query = df_jp.loc[(df_jp['computer_price'] =5000) (df_jp['computer_price']>=4000) (df_jp['computer_p_shop']=="联想") (df_jp['computer_intel']=="i5") (df_jp['computer_in']=="15.0英寸-15.9英寸"), :].copy()

plt.figure(figsize=(20, 10), dpi=100)

ax = sns.barplot(x='computer_sku', y='computer_price', data=df_jd_query)

ax.set_xlabel('联想品牌SKU')

ax.set_ylabel('价格')

ax.set_title('酷睿i5处理器屏幕15寸以上各SKU的价格')

boxplot_fig = ax.get_figure()

boxplot_fig.savefig('酷睿i5处理器屏幕15寸以上各SKU的价格.png', dpi=400)

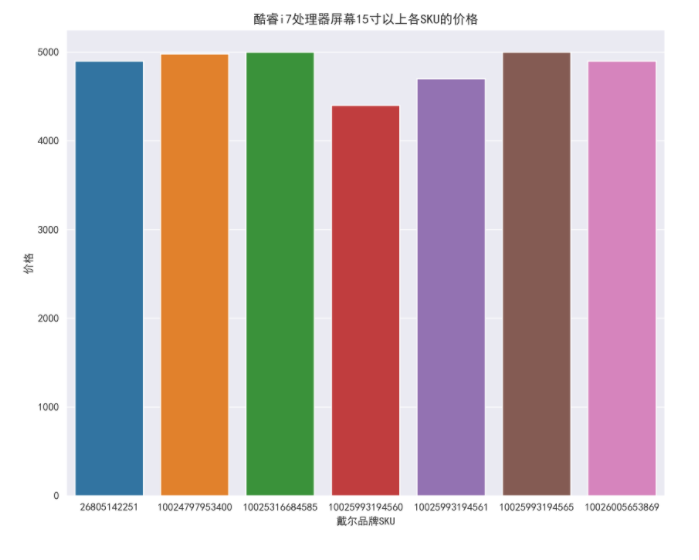

8.14 筛选价格在4000到5000,戴尔品牌、处理器是i7、屏幕大小在15寸以上的数据并查看价格

df_jp_daier = df_jp.loc[(df_jp['computer_price'] =5000) (df_jp['computer_price']>=4000) (df_jp['computer_p_shop']=="戴尔") (df_jp['computer_intel']=="i7") (df_jp['computer_in']=="15.0英寸-15.9英寸"), :].copy()

plt.figure(figsize=(10, 8), dpi=100)

ax = sns.barplot(x='computer_sku', y='computer_price', data=df_jp_daier)

ax.set_xlabel('戴尔品牌SKU')

ax.set_ylabel('价格')

ax.set_title('酷睿i7处理器屏幕15寸以上各SKU的价格')

boxplot_fig = ax.get_figure()

boxplot_fig.savefig('酷睿i7处理器屏幕15寸以上各SKU的价格.png', dpi=400)

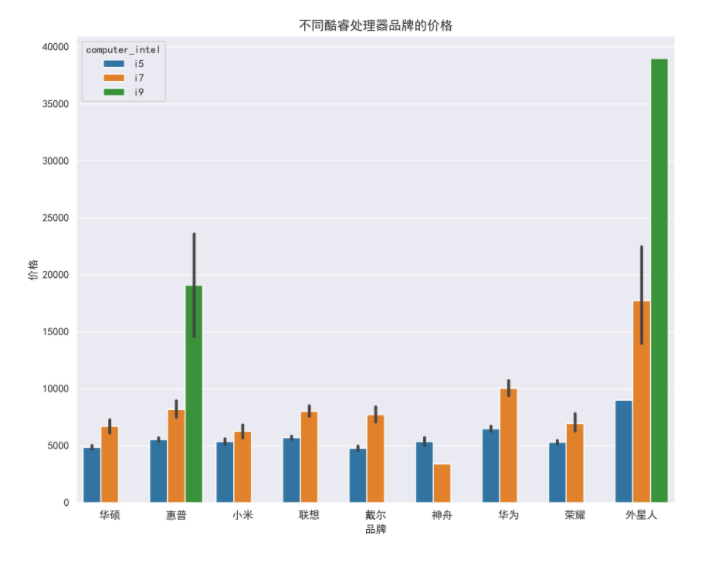

8.15 不同Intel处理器品牌的价格

plt.figure(figsize=(10, 8), dpi=100)

ax = sns.barplot(x='computer_p_shop', y='computer_price', data=df_jp, hue='computer_intel')

ax.set_xlabel('品牌')

ax.set_ylabel('价格')

ax.set_title('不同酷睿处理器品牌的价格')

boxplot_fig = ax.get_figure()

boxplot_fig.savefig('不同酷睿处理器品牌的价格.png', dpi=400)

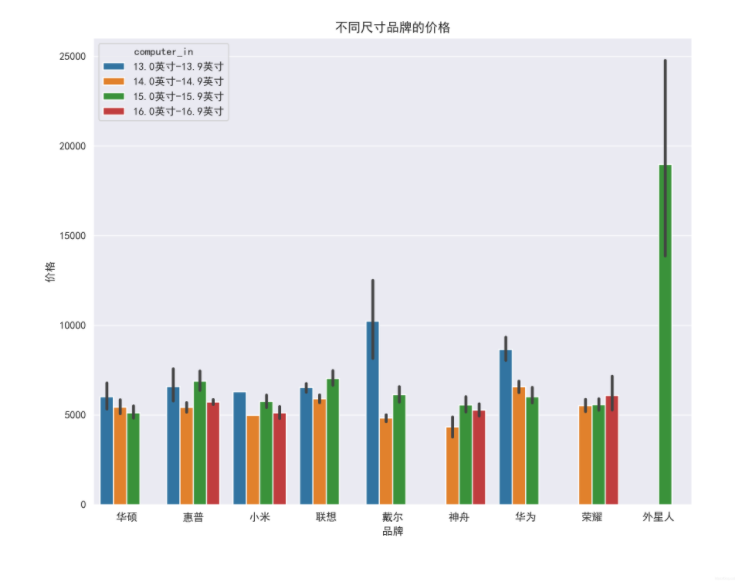

8.16 不同尺寸品牌的价格

plt.figure(figsize=(10, 8), dpi=100)

ax = sns.barplot(x='computer_p_shop', y='computer_price', data=df_jp, hue='computer_in')

ax.set_xlabel('品牌')

ax.set_ylabel('价格')

ax.set_title('不同尺寸品牌的价格')

boxplot_fig = ax.get_figure()

boxplot_fig.savefig('不同尺寸品牌的价格.png', dpi=400)

以上就是python基于scrapy爬取京东笔记本电脑数据并进行简单处理和分析的详细内容,更多关于python 爬取京东数据的资料请关注脚本之家其它相关文章!

您可能感兴趣的文章:- Android实现京东首页效果

- JS实现京东商品分类侧边栏

- 利用JavaScript模拟京东按键输入功能

- 仿京东平台框架开发开放平台(包含需求,服务端代码,SDK代码)

咨 询 客 服

咨 询 客 服